|

I'm a PhD student in the 3D Vision Lab at Seoul National University, advised by Prof. Young Min Kim. I was a research intern at Snap Research. My research focuses on building practical motion generation systems that enable controllable and robust human motion modeling. Recently, I have been focusing on egocentric motion reconstruction and text-to-motion generation. CV / LinkedIn / Google Scholar |

|

|

Research Keywords: Character Animation, Human Interaction, Controllable Motion Synthesis, Robust Motion Reconstruction. |

|

Chuan Guo, Inwoo Hwang, Jian Wang, and Bing Zhou NeurIPS, 2025 arxiv | project page Large-scale text-motion dataset featuring high-quality motion capture data paired with accurate, expressive textual annotations. |

|

Inwoo Hwang, Bing Zhou, Young Min Kim, Jian Wang, and Chuan Guo ICCV, 2025 (Highlight) arxiv | project page Models Human-Scene Interaction (HSI) as scene-aware motion in-betweening and supports various practical applications, including video-based HSI reconstruction. |

|

Inwoo Hwang, Jinseok Bae, Donggeun Lim, and Young Min Kim ICCV, 2025 arxiv | project page A controllable motion synthesis pipeline with high quality and precision, from explicit or implicit control signals, including time-agnostic motion control. |

|

Jinseok Bae, Inwoo Hwang, Young Yoon Lee, Ziyu Guo, Joseph Liu, Yizhak Ben-Shabat, Young Min Kim, and Mubbasir Kapadia ICCV, 2025 arxiv | project page A sparse keyframe-based motion diffusion model that better captures text prompts and improves overall motion quality. |

|

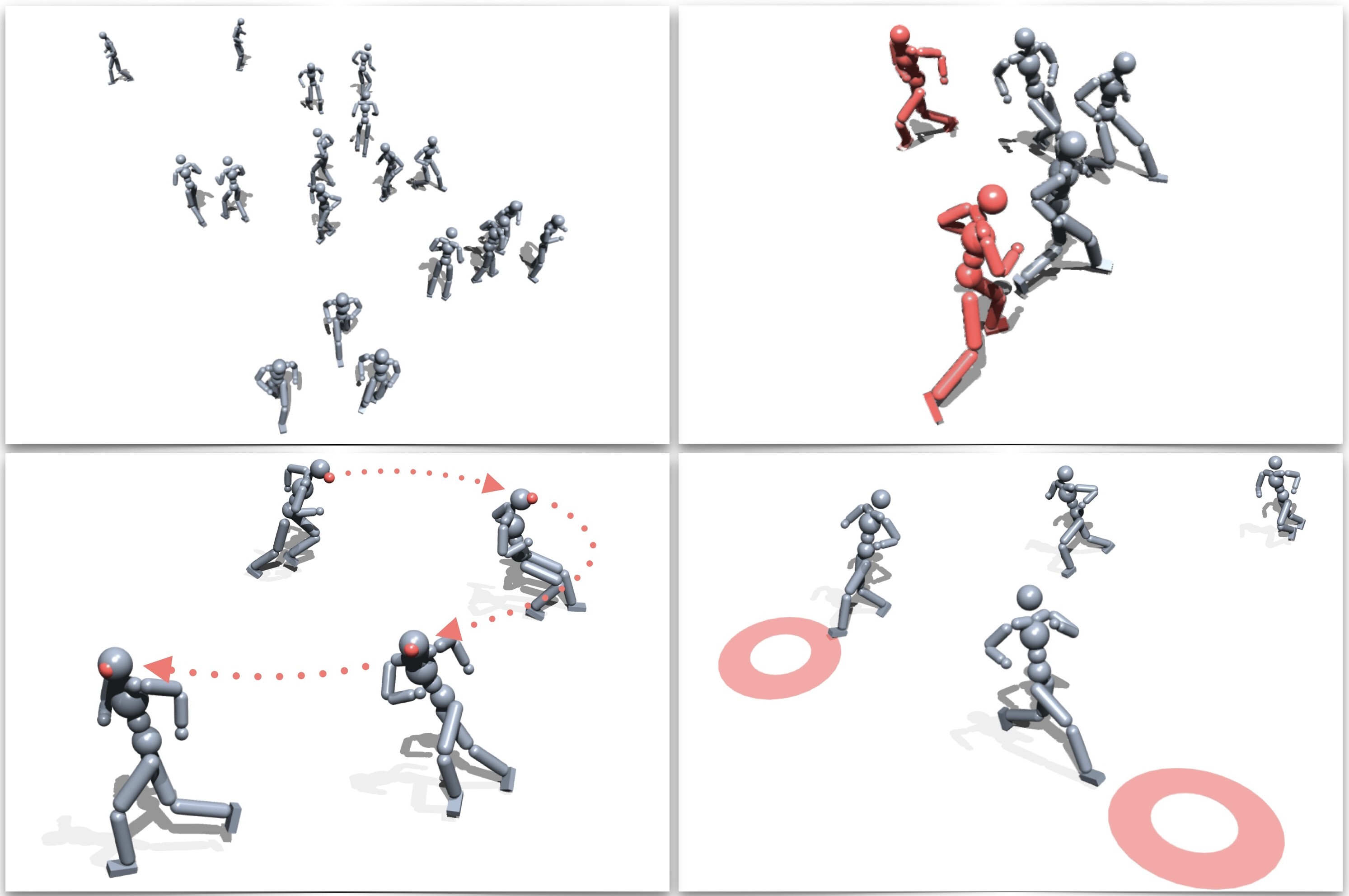

Donggeun Lim, Jinseok Bae, Inwoo Hwang, Seungmin Lee, Hwanhee Lee, and Young Min Kim ICCV, 2025 arxiv | project page A framework that creates a lively virtual dynamic scene with contextual motions of multiple humans. |

|

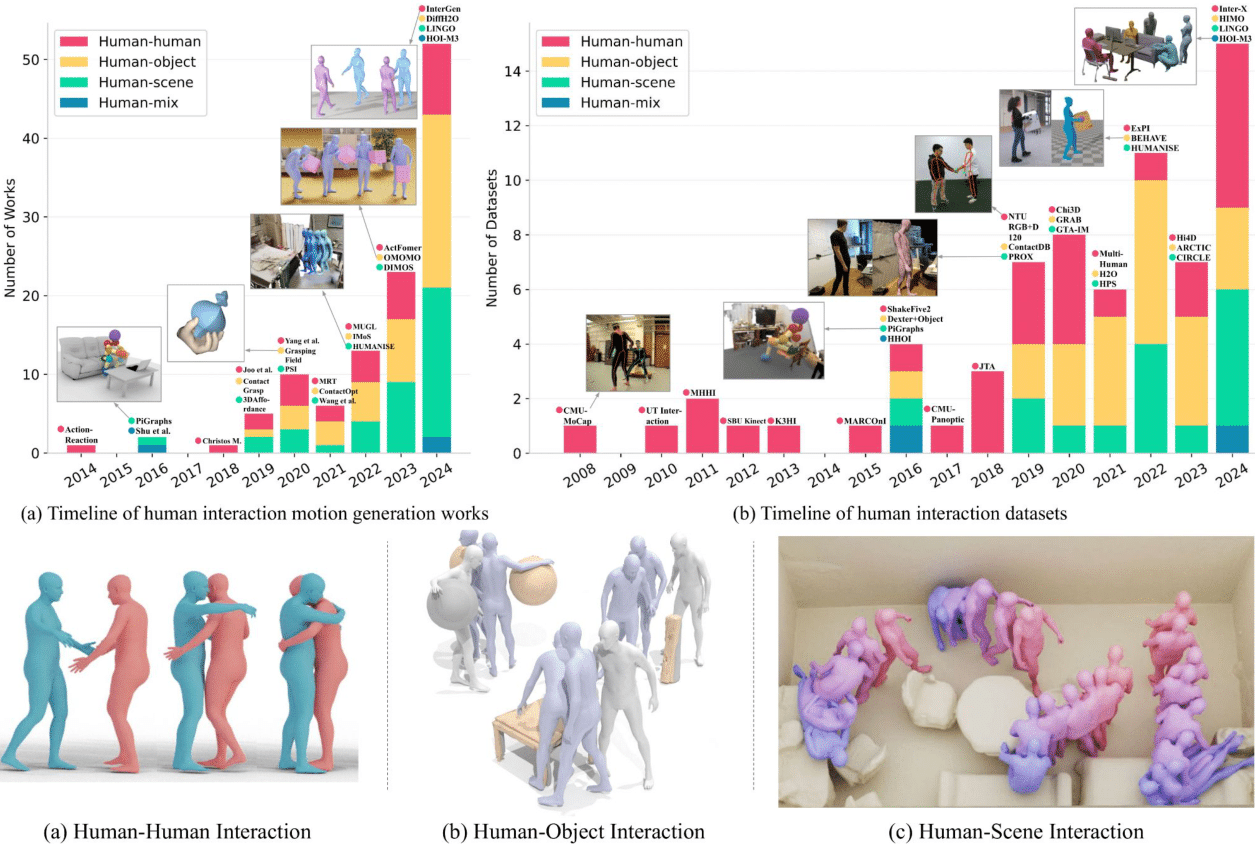

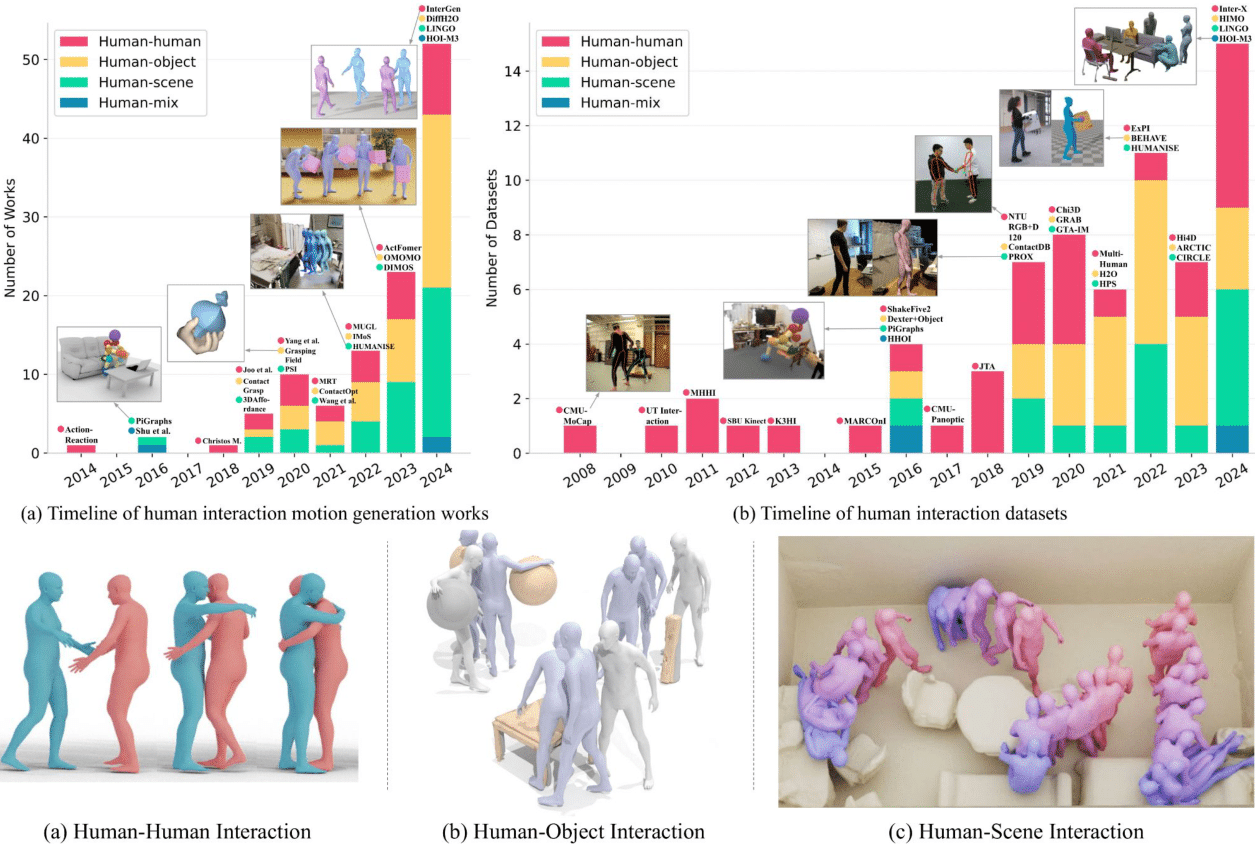

Kewei Sui, Anindita Ghosh*, Inwoo Hwang*, Bing Zhou, Jian Wang, and Chuan Guo IJCV, 2025 arxiv | project page A review of recent advances in human interaction motion generation, including human-human, human-object, human-scene, and human-mix interactions. |

|

Inwoo Hwang, Jinseok Bae, Donggeun Lim, and Young Min Kim CVPRW, 2025, HuMoGen paper | project page A motion generation pipeline from predefined key joint goal positions and a 3D environment. |

|

Jinseok Bae, Jungdam Won, Donggeun Lim, Inwoo Hwang, and Young Min Kim Eurographics, 2025 arxiv | paper | project page Integrates continuous and discrete latent representations, enabling physically simulated characters to efficiently utilize motion priors and adapt to diverse, challenging control tasks. |

|

Inwoo Hwang, Hyeonwoo Kim, and Young Min Kim CVPR, 2023 (Highlight) arxiv | paper | video | project page A method to automatically create realistic and part-aware textures for virtual scenes composed of multiple objects. |

|

Inwoo Hwang, Hyeonwoo Kim, Donggeun Lim, Inbum Park, and Young Min Kim Eurographics short, 2023 paper | video A method to process sparse, noisy point cloud input and generate high-quality stylized output. |

|

Inwoo Hwang, Junho Kim, and Young Min Kim WACV, 2023 arxiv | paper | video | project page A Neural Radiance Field (NeRF) derived from event data, which serves as a solution for various event-based applications and is highly robust to sensor noise. |

|

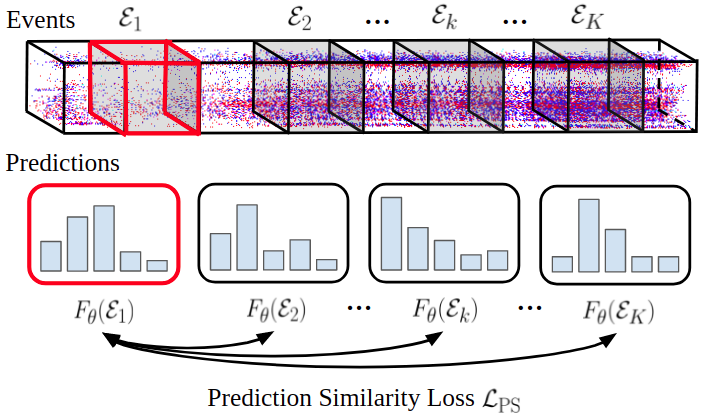

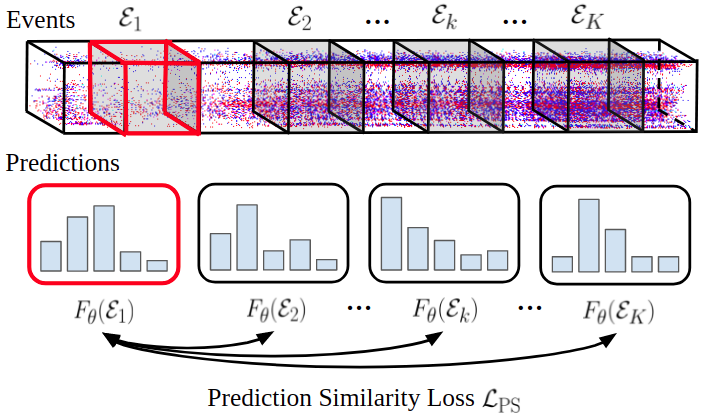

Junho Kim, Inwoo Hwang, and Young Min Kim CVPR, 2022 arxiv | paper | video | project page A simple and effective test-time adaptation algorithm for event-based object recognition that successfully adapts classifiers to various external conditions. |

|

Junho Lee, Junhwa Hur, Inwoo Hwang, and Young Min Kim IROS, 2022 paper | video A real-world grasping algorithm that generalizes to transparent and opaque objects via masks. |

|

Research Scientist Intern, Computational Imaging Team, May 2024 - Sep. 2024. Mentors: Bing Zhou, Chuan Guo, Jian Wang. Working on physically plausible reconstruction of human motion and scenes from real-world videos. |

Honors and Awards: Excellent Research Talent Fellowship, from BK21, 2023 fall; Hyundai Motor Chung Mong-Koo Scholarship, 2022 to present; University Mathematics Competition, Field 1 for Mathematics Major, Gold Medal, 2020; President Science Scholarship (Field: Mathematics), 2016 to 2021; Final Korean Mathematical Olympiad, Excellence Award, 2015 |

Education: Seoul National University, Seoul, Korea, Mar. 2022 - Present, M.S./Ph.D. in Electrical and Computer Engineering; Seoul National University, Seoul, Korea, Mar. 2016 - Feb. 2022, B.S. in Electrical and Computer Engineering; Seoul Science High School, Seoul, Korea, Mar. 2013 - Feb. 2016 |

Academic Activities: Conference Reviewer (CVPR, ICCV, NeurIPS, 3DV, ICRA); Journal Reviewer (RA-L) |

|

Design / source code from Jon Barron's |